When generative AI arrived, people across the marketing industry were excited, nervous, or anxious about their jobs and productivity. Some, even worse, simply gave up.

The argument that “AI won’t take your jobs; someone with AI expertise will” was constantly discussed on LinkedIn and other social media.

Instilling fear of AI didn’t help anyone. Yet amid all that noise, one virus slipped through the cracks in the industry: AI detection.

Like in a Michael Bay movie, it went unnoticed until it was too late. There were no superfluous bangs or explosions, just a tiny evil robot that slipped by and cost you your reputation.

It can take years to build your reputation and one small slip to destroy it with a client or a prospect who finds value in you.

If you’re unwilling to put effort into learning AI, leaning on the evil of AI detection becomes even more normal and comfortable.

Yes, for some it becomes more important than putting energy into upskilling and learning skills that boost your business.

We’re already in the AI age, where OpenAI releases AI models the way Apple releases products every year. It’s only going to get better or worse from here, depending on the lens of your worldview.

Because why tend to anything till it becomes a headache?

AI Detection: Autobots vs. Decepticons, and you’re stuck in between

On one end, there are people abusing AI so much that you see them posting generic AI-generated comments everywhere.

All this is because some LinkedIn hustle bro told them to comment 50 times daily.

This level of fake engagement is humanly impossible, and it’s another sign of desperation among people looking to find clients.

Abusing AI delivers short-term gratification, but only because social media planted the seed of being artificially productive in your mind. Long term, if you’re not solving a business problem or something that affects your health, wealth, or relationships, it’s all just bells and whistles.

The same can be said about the AI detection scenario.

Humor me for a moment when I say that generative AI models (LLMs) are the Autobots (assume for now that they’re good for us), and AI detection tools are the Decepticons.

Where LLMs help you create, refine, and modify your creative work, AI detection models seek to undermine everything you hold near and dear.

The question arises: Why not create your own content without any LLM involvement whatsoever? Well, in a perfect world, LLMs would help you without effort, and AI detector models would be 99% accurate. But they’re not.

People who are unaware of this can do you more harm than good. As I said above, AI won’t take your jobs; someone with AI expertise will.

In this case, it’s:

AI detectors won’t ruin your reputation; someone with inaccurate information about such a model will.

To beat AI detection, put effort into:

- Building a decent long-term relationship with your clients

- Educating yourself in data science and ML (at least at a beginner level)

- Working on lead generation that attracts people who know about these issues. Attract > Chase

So whether it is ZeroGPT, Originality.ai, or any other tool out there, free or paid, it drags you into this Autobots vs. Decepticons war.

It’s ironic, really, that when you should be working to upskill and master your skills and leave AI anxiety behind, your clients and the industry would rather doubt your capabilities with AI detection than use their own brains.

Let me explain how these AI detection tools work and the logic behind them.

Most importantly, I’ll show why these tools aren’t just technically flawed, but logically flawed too, from the point of view of a data-driven copywriter.

I’ll start with the logical flaws first so that you don’t have to scratch your head about the tech know-how.

AI Detection: The logical flaw and the practical way to beat this

Ever thought about how accurate these AI detection tools really are? They often claim to know what’s real and what’s AI-generated, but do they? Spoiler: not always.

Their so-called precision can sometimes be as reliable as a weather forecast: occasionally right, but often way off. Consider the case of OpenAI. Even they had to withdraw their own detection tool because of its low accuracy, which included falsely tagging original content as AI-generated.

If the pioneers at OpenAI can’t get it right, should we trust these tools without question?

Marketing Landscape: The real problem behind the AI detection curtain

When SEO managers, heads of content, and others in content management roles don’t educate themselves, or CEOs aren’t willing to stay up to date, misinformation spreads.

On one end of the spectrum, you have newcomers who are already anxious and desperate to make a living in the content industry. On the other end, you have experienced freelance marketing writers who don’t run into this problem, because they work with good clients who educate themselves about such issues.

It’s the middle rung of the ladder that’s broken, where newcomers can’t get by without an accident or an ankle slip (getting accused of using AI even when they aren’t). At this very stage of the career ladder, people are trying to climb a broken ladder without the means to fix it.

The FIX is as simple as educating the clients and managers you work with, and encouraging them to experiment for themselves before they subject you to the evil of being falsely accused of handing in AI-generated work.

PS: If they’re not receptive and want to stick to misinformation, feel free to look into this lead generation guide, so that you’re not living in scarcity.

The shift in goalposts: Remember when great content was all about engagement, awareness, and persuasion?

Now it’s all about sentence length and corrections from Hemingway, Grammarly scores, and other tools and practices that are more vanity metrics than anything meaningful for the end user. Sure, they’re helpful and can refine your writing, but that’s a job for the editing phase.

You’re not nailing brand voice, talking like a human is supposed to, or even filling the information gap when all you care about are vanity metrics. You’re not giving the audience what it needs, and that’s the sole reason SEOs panic each time Google releases a human connection update.

Behind the scenes look at the gears

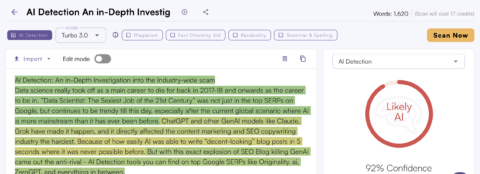

First, let’s start with a short passage, which will be our raw corpus data:

Data science really took off as a main career to die for back in 2017-18 and onwards as the career to be in.

“Data Scientist: The Sexiest Job of the 21st Century” was not just in the top SERPs on Google, but continues to be trendy till this day, especially after the current global scenario where AI is more mainstream than it has ever been before.

ChatGPT and other GenAI models like Claude, Grok have made it happen, and it directly affected the content marketing and SEO copywriting industry the hardest. Because of how easily AI was able to write “decent-looking” blog posts in 5 seconds where it was never possible before.

But with this exact explosion of SEO Blog killing GenAI came out the anti-rival – AI Detection tools you can find on top Google SERPs like Originality.ai, ZeroGPT, and everything in between.

Where GenAI is supposed to be helpful and cut down research time, some agencies, individual marketers took to it as the absolute best they can do for quality to rank and monetize their blogging efforts.

Needless to say, quick and easy never stands the test of time, as evidently expressed by the Google March core update. Even before this update, these tools were soaring in popularity among those unlucky enough to be misinformed and/or as an excuse to add headache to the content creation process.

Step 1: Data Collection

- Training Data: The model learns from numerous documents that are labeled as either human-written or AI-generated.

- Example: The training dataset includes thousands of academic papers, journal articles, and blog posts, some of which are known to be AI-generated.
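As a rough sketch of what labeled training data could look like, here is a tiny, hand-made dataset and a train/test split. Everything in it is an assumption for illustration: the eight sentences, the `"human"` and `"ai"` labels, and the `train_test_split` helper are all made up and don't come from any real detection tool.

```python
import random

# Hypothetical, hand-written examples for illustration only.
# A real training set would hold thousands of labeled documents.
labeled_docs = [
    ("Honestly, I rewrote this intro four times before it clicked.", "human"),
    ("My client hated the first draft, so we started over on a call.", "human"),
    ("I still remember the first blog post I got paid for.", "human"),
    ("We tested three headlines and the ugly one won.", "human"),
    ("In today's fast-paced digital landscape, content is king.", "ai"),
    ("Unlock the power of synergy to elevate your brand.", "ai"),
    ("In the era of innovation, businesses must adapt to thrive.", "ai"),
    ("This comprehensive guide will delve into key strategies.", "ai"),
]


def train_test_split(docs, test_ratio=0.25, seed=42):
    """Shuffle a copy of the labeled documents and split it into train and test sets."""
    shuffled = list(docs)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    cut = int(len(shuffled) * (1 - test_ratio))
    return shuffled[:cut], shuffled[cut:]


train, test = train_test_split(labeled_docs)
print(len(train), "training docs,", len(test), "held-out docs")
```

Whatever ends up in a set like this decides what the model later treats as "normal", which is where the bias problems discussed further down come from.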

Step 2: Feature Extraction

In this step, the detection tool takes the text you provide and breaks it down into smaller, digestible pieces that it can analyze. Think of it like preparing ingredients before cooking a meal.

- Text Tokenization: The paragraph is split into individual tokens (words and punctuation marks).

- Example: [“Data”, “science”, “really”, “took”, “off”, “as”, “a”, “main”, “career”, “to”, “die”, “for”, “back”, “in”, “2017-18”, …]

- N-Gram Analysis: The tool examines sequences of words (n-grams) to understand context and flow.

- Example Bigrams: [“Data science”, “science really”, “really took”, “took off”, “off as”, “as a”, …]

- Part-of-Speech Tagging: The tool identifies the grammatical roles of words (nouns, verbs, adjectives, etc.).

- Example: In the sentence “Data science really took off,” “Data” is a noun, “science” is a noun, “really” is an adverb, and “took off” is a verb phrase.

- Syntactic Parsing: The tool analyzes the sentence structure to understand how words are related.

- Example: In the sentence “ChatGPT and other GenAI models like Claude, Grok have made it happen,” the tool identifies “ChatGPT and other GenAI models” as the subject and “have made it happen” as the verb phrase.
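The first two bullets above, tokenization and n-grams, can be sketched in a few lines of plain Python. This is a minimal illustration, not how any particular detector does it: the `tokenize` and `ngrams` helpers and the regex rule are my own assumptions. Part-of-speech tagging and syntactic parsing need a trained NLP library, so they are left out here.

```python
import re


def tokenize(text):
    """Split text into word and punctuation tokens (a deliberately simple rule)."""
    # \w+(?:-\w+)* keeps hyphenated items such as "2017-18" together;
    # [^\w\s] captures each punctuation mark as its own token.
    return re.findall(r"\w+(?:-\w+)*|[^\w\s]", text)


def ngrams(tokens, n=2):
    """Return every run of n consecutive tokens, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


sentence = "Data science really took off."
tokens = tokenize(sentence)
bigrams = ngrams(tokens, 2)

print(tokens)
print(bigrams)
```

Changing `n` to 3 gives trigrams, which is the same idea with a slightly wider window.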

AI Detection Models Start Getting Messy and Flawed from Here:

Step 3: Probability Calculation

3.1 Feature Vector Creation:

- The tool combines all the extracted features into a single numerical representation called a feature vector.

- This feature vector is what the machine learning model uses to make its prediction.

- Example: The feature vector might include values for lexical diversity, sentence length, presence of idiomatic expressions, etc.
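To make "feature vector" less abstract, here is a small sketch that turns text into three numbers. The three features (lexical diversity, average sentence length, average word length) and the `feature_vector` helper are assumptions chosen for simplicity. Real tools likely use many more features, and detecting idiomatic expressions is skipped entirely.

```python
import re


def feature_vector(text):
    """Turn raw text into [lexical_diversity, avg_sentence_length, avg_word_length]."""
    words = re.findall(r"[A-Za-z0-9'-]+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not words or not sentences:
        return [0.0, 0.0, 0.0]
    lexical_diversity = len(set(words)) / len(words)    # unique words / total words
    avg_sentence_length = len(words) / len(sentences)   # words per sentence
    avg_word_length = sum(len(w) for w in words) / len(words)
    return [lexical_diversity, avg_sentence_length, avg_word_length]


sample = "Data science really took off. It really took off fast."
vector = feature_vector(sample)
print(vector)
```

Notice how much of the writing gets thrown away here: meaning, accuracy, and usefulness to the reader never make it into the numbers.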

3.2 Model Input:

- The feature vector is fed into a pre-trained machine learning model.

- Example: The model processes the feature vector to analyze the text’s characteristics.

3.3 Model Prediction:

- The machine learning model makes a prediction about whether the text is human-written or AI-generated.

- This prediction is based on the patterns and features the model has learned during training.

- Example: The model might recognize that the text’s high lexical diversity and varied sentence structure are typical of human writing.

3.4 Probability Score:

- The model outputs a probability score indicating the likelihood that the text is human-written or AI-generated.

- This score helps determine the final classification.

- Example: The model might output a score of 0.90, meaning it is 90% confident that the text is human-written.
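Steps 3.2 to 3.4 can be sketched with the simplest possible model: a weighted sum pushed through a logistic (sigmoid) function. The weights, bias, and threshold below are hypothetical numbers picked by hand for illustration. A real detector would learn its parameters from training data and is almost certainly more complex than this.

```python
import math

# Hypothetical weights and bias, chosen by hand for illustration only.
# A real detector would learn these from labeled training data.
WEIGHTS = [4.0, 0.05, 0.2]
BIAS = -3.0


def human_probability(features):
    """Map a feature vector to a 0..1 score with a logistic (sigmoid) function."""
    z = BIAS + sum(w * x for w, x in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))


def classify(features, threshold=0.5):
    """Return a label and the underlying score for one feature vector."""
    score = human_probability(features)
    label = "human-written" if score >= threshold else "AI-generated"
    return label, score


# Feature vector: [lexical diversity, avg sentence length, avg word length]
label, score = classify([0.7, 18.0, 4.5])
print(label, round(score, 2))
```

The label depends entirely on the learned weights and on where the threshold sits, and neither is visible to the person being judged by the score.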

No matter how many times you rewrite your content to hit the AI detection algorithm’s passing score, the logic is flawed. So you can either chase the algorithm on a daily basis or channel your energy into making better-targeted content.

Biased Training Data

- Issue: If the AI was primarily trained on specific types of text (e.g., academic papers), it may not accurately evaluate diverse writing styles or languages.

- Example Flaw: If the tool was trained mostly on formal academic papers, informally written blog posts can be flagged simply because their style and word usage patterns differ from the training data. The reverse happens too: academic papers and technical content can be flagged as AI-generated.

The Misguided Notion of “Just Change a Few Sentences”

Now, let’s address the common but misguided advice that some freelancers or clients might give: “Just change a few sentences here and there” or “Do this until the AI detection score is less than 40-50%.”

Why this misconception doesn’t work behind the scenes

Superficial Changes Don’t Fool Advanced Models:

- Reality Check: Simply tweaking a few sentences won’t change the underlying features that the AI detection tool analyzes.

- Example: Changing “Data science really took off” to “Data science became popular” doesn’t alter the overall lexical diversity, sentence structure, or stylistic elements.

- The tool looks at a wide range of features, including part-of-speech tagging, syntactic parsing, lexical diversity, sentence structure, and stylistic elements. Superficial changes don’t affect these deep features.
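Here is a toy check of that claim, using the same sentence swap as the example above. The `features` helper and the three-sentence text are assumptions for illustration, and only two simple features are measured. Treat it as a sketch of the idea, not a test against any real detector.

```python
import re


def features(text):
    """Return (lexical_diversity, avg_sentence_length) for a piece of text."""
    words = re.findall(r"[A-Za-z0-9'-]+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lexical_diversity = len(set(words)) / len(words)
    avg_sentence_length = len(words) / len(sentences)
    return lexical_diversity, avg_sentence_length


original = (
    "Data science really took off back in 2017-18. "
    "ChatGPT and other GenAI models have made it happen. "
    "Quick and easy never stands the test of time."
)
# The "just change a few words" edit from the example above.
edited = original.replace("Data science really took off", "Data science became popular")

before = features(original)
after = features(edited)
diversity_shift = abs(before[0] - after[0])
length_shift = abs(before[1] - after[1])

print("before:", before)
print("after: ", after)
```

Even in a text this short, both numbers barely move after the edit. In a full-length article, one swapped sentence would shift them even less.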

Feature Extraction is Comprehensive:

- Reality Check: The tool looks at the entire text’s structure, flow, and style, not just individual sentences.

- Example: Even if you change a few words, the tool still sees the same patterns in sentence complexity and word variety.

- The tool’s analysis is comprehensive, examining the text’s grammatical roles, relationships between words, variety of vocabulary, and overall sentence construction.

Probability Scores Reflect Overall Patterns:

- Reality Check: The probability score is based on the entire text, not just isolated parts. Changing a few sentences won’t significantly impact the overall score.

- Example: If the text is 90% likely to be human-written, changing a few sentences might only reduce that to 88%, which is still high.

- The feature vector created from the extracted features is fed into the model, which then outputs a probability score based on the overall patterns in the text.

AI Models are Continuously Improving:

- Reality Check: AI detection tools are getting better at identifying subtle patterns that distinguish human and AI writing. Simple tricks won’t work for long.

- Example: Advanced models can detect inconsistencies and unnatural changes in the text, making superficial edits ineffective.

- The machine learning models used by AI detection tools are trained on large datasets and continuously updated to recognize new patterns and improve accuracy.

End Result: Inaccuracies and Misinformation Galore

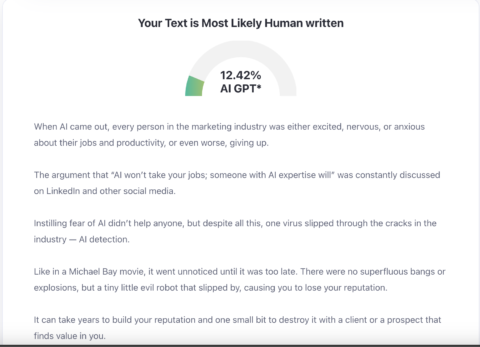

This section simply shows what happens when the section above is checked by different AI detection tools, each running the latest machine learning models and each telling you to trust it.

Feel free to copy-paste this, or your own writing, into these tools until the end of time.

Bottom line: Whom to trust? Stranger human vs Stranger AI?

The only thing you should be looking at going forward is whether the content makes sense, evokes emotion, and helps your audience do what they set out to do.

- Does it fit your brand voice?

- Does it make sense and sound the way a normal human in your culture and language would talk?

- Are you looking to micro-manage every word and H2 on the page for conversion purposes?

- Can you spot “synergy”, “in the era of”, and other generic phrases popping up?

- Does the content lack the depth and research you’re used to?

- Can you see a clear difference in quality between the samples and the delivered work?

If you answer “Yes” to the last 3 questions, the content you’re receiving is most likely AI-generated.

If you answer “No” to the first 3 questions, you shouldn’t be looking at AI detectors, but at a heatmap and your engagement.

The End Game

AI detection models are NOT here to stay because humans don’t use an AI detector brain chip just yet. If a sentence is persuasive, it doesn’t matter if a bot generated it, or a human wrote it. Each word and sentence is there in your copy to do a specific job.

Move those two eyes to the next thing. The thing can be the next sentence, the next landing page, the next sales page, or anything else. That’s it for this one. Hope you enjoyed it, and see ya in the next one!